
Bita Ashoori

💼 Data Engineering Portfolio

Designing scalable, cloud-native data pipelines that power decision-making across healthcare, retail, and public services.

About Me

I’m a Data Engineer based in Vancouver with over 5 years of experience spanning data engineering, business intelligence, and analytics. I specialize in designing cloud-native ETL/ELT pipelines and automating data workflows that transform raw data into actionable insights.

My background includes work across healthcare, retail, and public-sector environments, where I’ve delivered scalable and reliable data solutions. With 3+ years building cloud data pipelines and 2+ years as a BI/ETL Developer, I bring strong expertise in Python, SQL, Apache Airflow, and AWS (S3, Lambda, Redshift).

I’m currently expanding my skills in Azure and Databricks, focusing on modern data stack architectures (including Delta Lake, Medallion design, and real-time streaming) to build next-generation data platforms that drive performance, reliability, and business value.

Contact Me

🔗 Quick Navigation

- 🛒 Azure ADF Retail Pipeline

- 🏗️ End-to-End Data Pipeline with Databricks

- ☁️ Cloud ETL Modernization

- 🛠️ Airflow AWS Modernization

- ⚡ Real-Time Marketing Pipeline

- 🎮 Real-Time Player Pipeline

- 📈 PySpark Sales Pipeline

- 🏥 FHIR Healthcare Pipeline

- 🚀 Real-Time Event Processing with AWS Kinesis, Glue & Athena

- 🔍 LinkedIn Scraper (Lambda)

Project Highlights

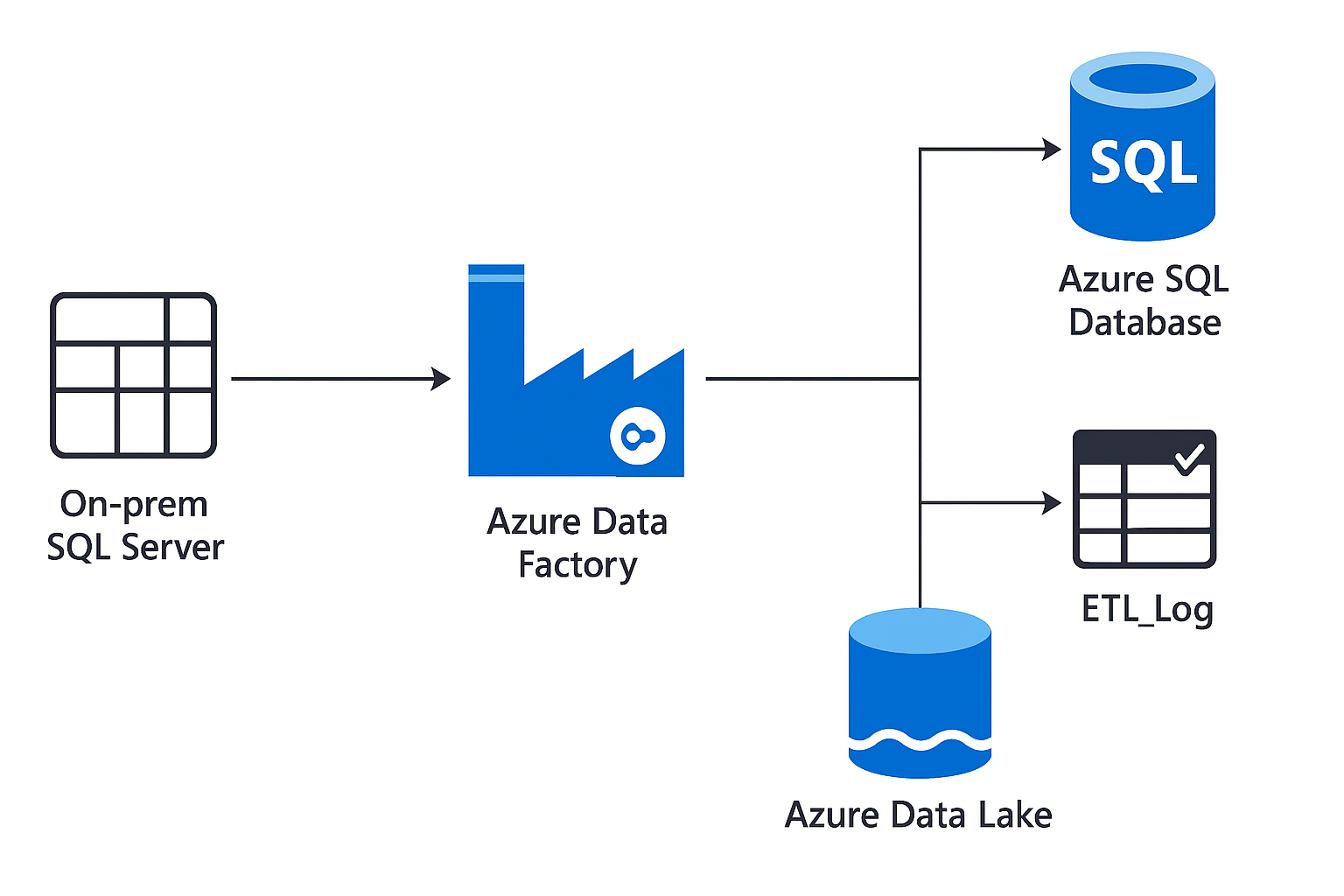

🛒 Azure ADF Retail Pipeline

Scenario: Retail organizations needed an automated cloud data pipeline to consolidate and analyze sales data from multiple regions.

📎 View GitHub Repo

Solution: Developed a cloud-native ETL pipeline using Azure Data Factory that ingests, transforms, and loads retail sales data from on-prem SQL Server to Azure Data Lake and Azure SQL Database. Implemented parameterized pipelines, incremental data loads, and monitoring through ADF logs.

✅ Impact: Improved reporting efficiency by 45%, automated data refresh cycles, and reduced manual dependencies.

🧰 Stack: Azure Data Factory, Azure SQL Database, Blob Storage, Power BI

🧪 Tested On: Azure Free Tier + GitHub Codespaces
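
The incremental loads mentioned in the solution are commonly built on a high-watermark: each run copies only rows modified since the last recorded timestamp. The snippet below is a minimal sketch of that idea, not the pipeline in the repo. It uses in-memory SQLite as a stand-in for both SQL Server and Azure SQL Database, and the `sales` table, its columns, and `incremental_load` are hypothetical names chosen for illustration.

```python
import sqlite3

# Hypothetical table layout; modified_at holds ISO dates, which sort as text.
DDL = (
    "CREATE TABLE sales ("
    "id INTEGER PRIMARY KEY, region TEXT, amount REAL, modified_at TEXT)"
)


def incremental_load(source, sink, watermark):
    """Copy rows modified after `watermark` and return the new watermark."""
    rows = source.execute(
        "SELECT id, region, amount, modified_at FROM sales "
        "WHERE modified_at > ? ORDER BY modified_at",
        (watermark,),
    ).fetchall()
    # INSERT OR REPLACE keeps the sink idempotent if a row is re-sent.
    sink.executemany("INSERT OR REPLACE INTO sales VALUES (?, ?, ?, ?)", rows)
    sink.commit()
    # Advance the watermark only when something was copied.
    return rows[-1][3] if rows else watermark


source = sqlite3.connect(":memory:")
sink = sqlite3.connect(":memory:")
source.execute(DDL)
sink.execute(DDL)
source.executemany(
    "INSERT INTO sales VALUES (?, ?, ?, ?)",
    [(1, "west", 100.0, "2024-01-01"), (2, "east", 250.0, "2024-01-02")],
)

wm = incremental_load(source, sink, "1900-01-01")  # first run: full load
source.execute("INSERT INTO sales VALUES (3, 'north', 75.0, '2024-01-03')")
wm = incremental_load(source, sink, wm)  # second run: only the new row
copied = sink.execute("SELECT COUNT(*) FROM sales").fetchone()[0]
```

In Azure Data Factory the same pattern is usually expressed with a lookup of the stored watermark followed by a parameterized copy activity; the Python version only shows the control flow.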

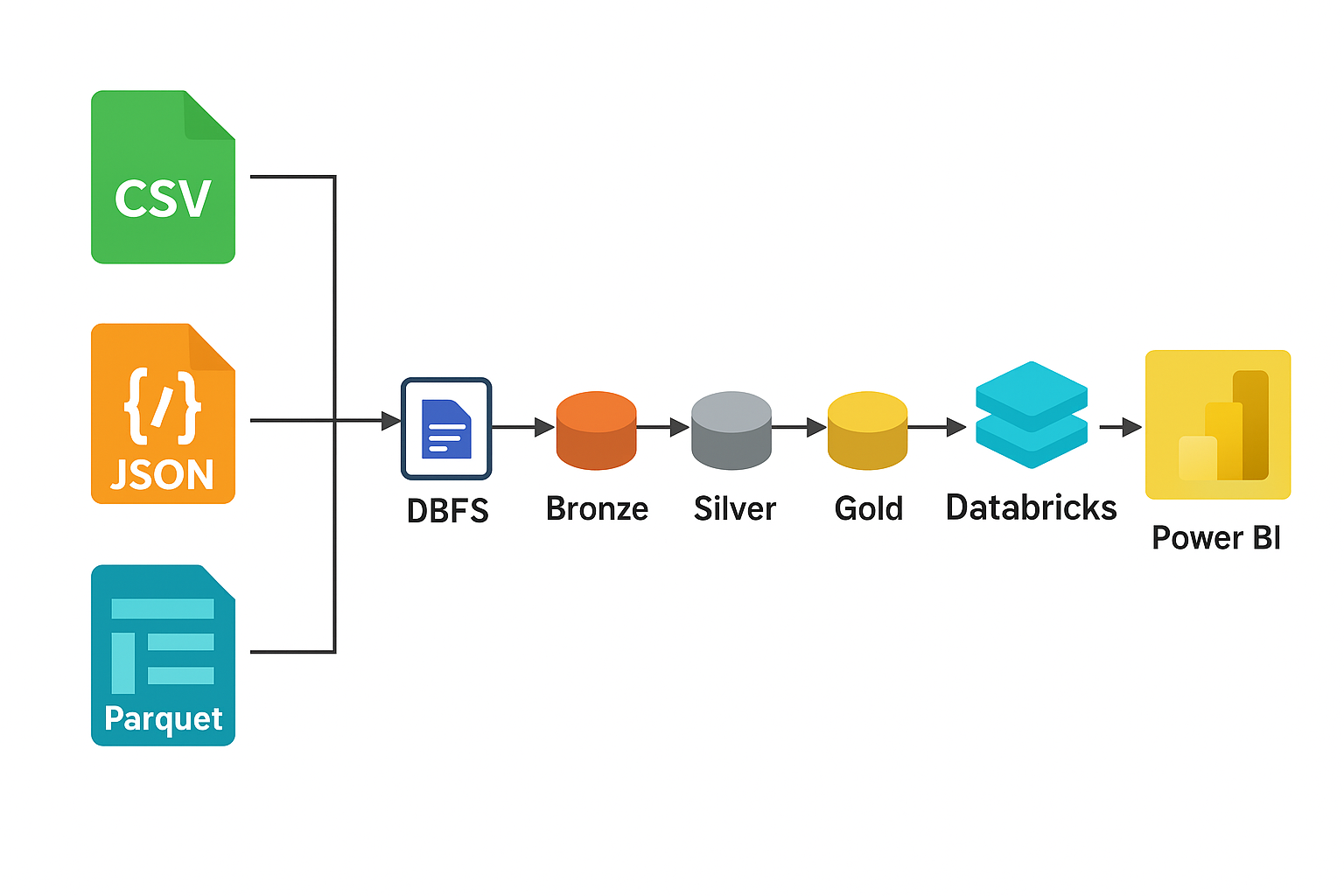

🏗️ End-to-End Data Pipeline with Databricks

Scenario: Designed and implemented a complete end-to-end ETL pipeline in Azure Databricks, applying the Medallion Architecture (Bronze → Silver → Gold) to build a modern data lakehouse for analytics.

📎 View GitHub Repo

Solution: Developed a multi-layer Delta Lake pipeline to ingest, cleanse, and aggregate retail data using PySpark and SQL within Databricks notebooks. Implemented data quality rules, incremental MERGE operations, and created analytical views for dashboards.

✅ Impact: Improved data reliability and reduced transformation latency by enabling efficient, governed, and automated data processing in the Databricks ecosystem.

🧰 Stack: Azure Databricks, Delta Lake, PySpark, Spark SQL, Unity Catalog, Power BI Cloud

🧪 Tested On: Azure Databricks Community Edition + GitHub Codespaces
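
To make the Bronze → Silver → Gold flow and the incremental MERGE step concrete, here is a small plain-Python sketch. It is an illustration under assumptions rather than the notebook code: the quality rule, the `order_id`, `store`, and `amount` fields, and all function names are hypothetical, and a dictionary stands in for the Silver Delta table.

```python
def clean(bronze_rows):
    """Silver-layer rule (assumed): keep rows with a key and a non-negative amount."""
    return [
        row for row in bronze_rows
        if row.get("order_id") is not None and row.get("amount", -1) >= 0
    ]


def merge(target, updates, key="order_id"):
    """Upsert like MERGE: matched keys are updated, unmatched keys inserted."""
    merged = dict(target)
    for row in updates:
        merged[row[key]] = row
    return merged


def gold_totals(silver):
    """Gold-layer aggregate: total amount per store."""
    totals = {}
    for row in silver.values():
        totals[row["store"]] = totals.get(row["store"], 0) + row["amount"]
    return totals


batch1 = [
    {"order_id": 1, "store": "A", "amount": 10.0},
    {"order_id": None, "store": "A", "amount": 5.0},  # rejected: no key
]
batch2 = [
    {"order_id": 1, "store": "A", "amount": 12.0},  # updates order 1
    {"order_id": 2, "store": "B", "amount": 7.0},   # new order
    {"order_id": 3, "store": "B", "amount": -1.0},  # rejected: negative
]

silver = {}
for batch in (batch1, batch2):
    silver = merge(silver, clean(batch))
totals = gold_totals(silver)
```

Because the merge is keyed, replaying `batch2` leaves the Silver table unchanged, which is the property that makes incremental reruns safe.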

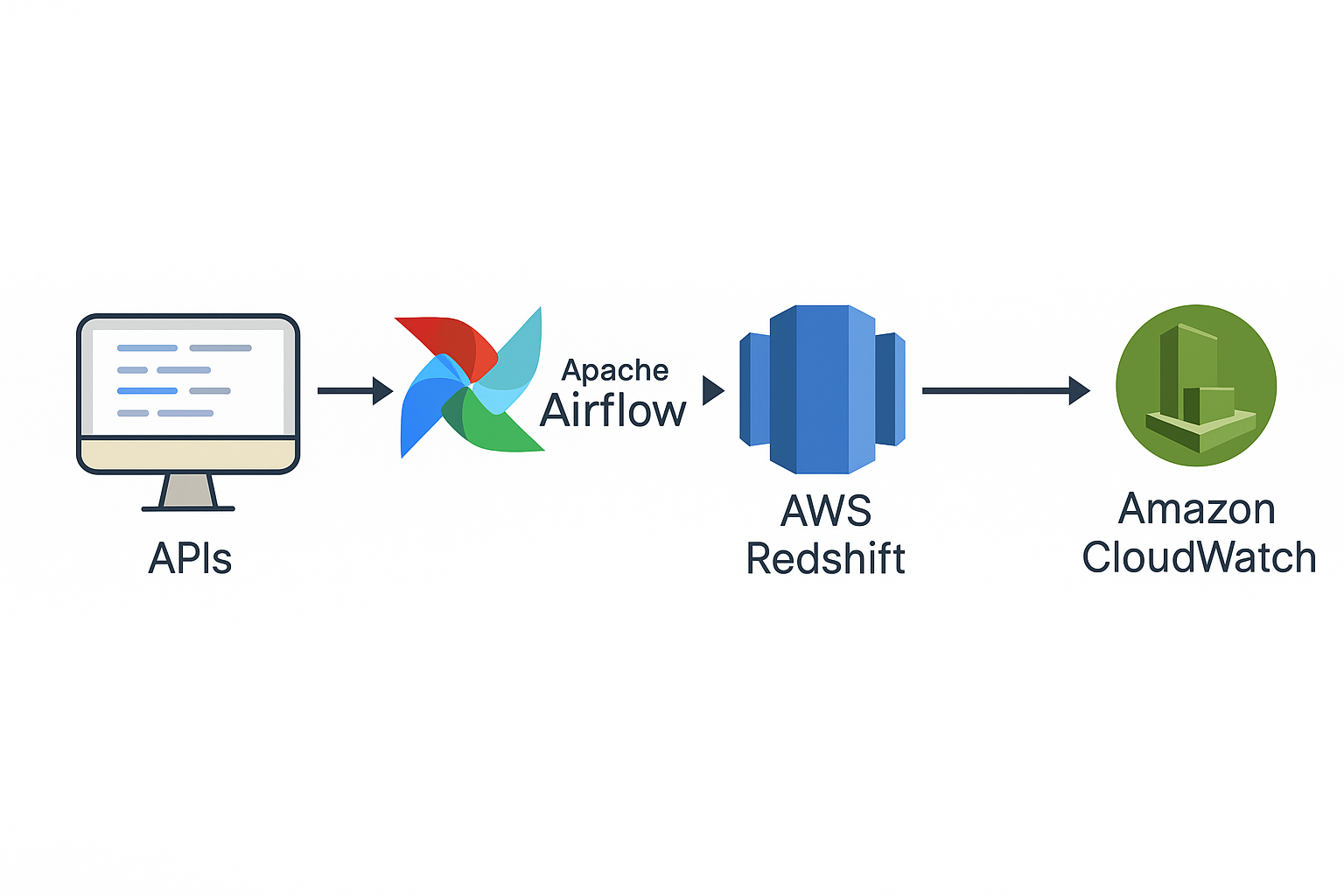

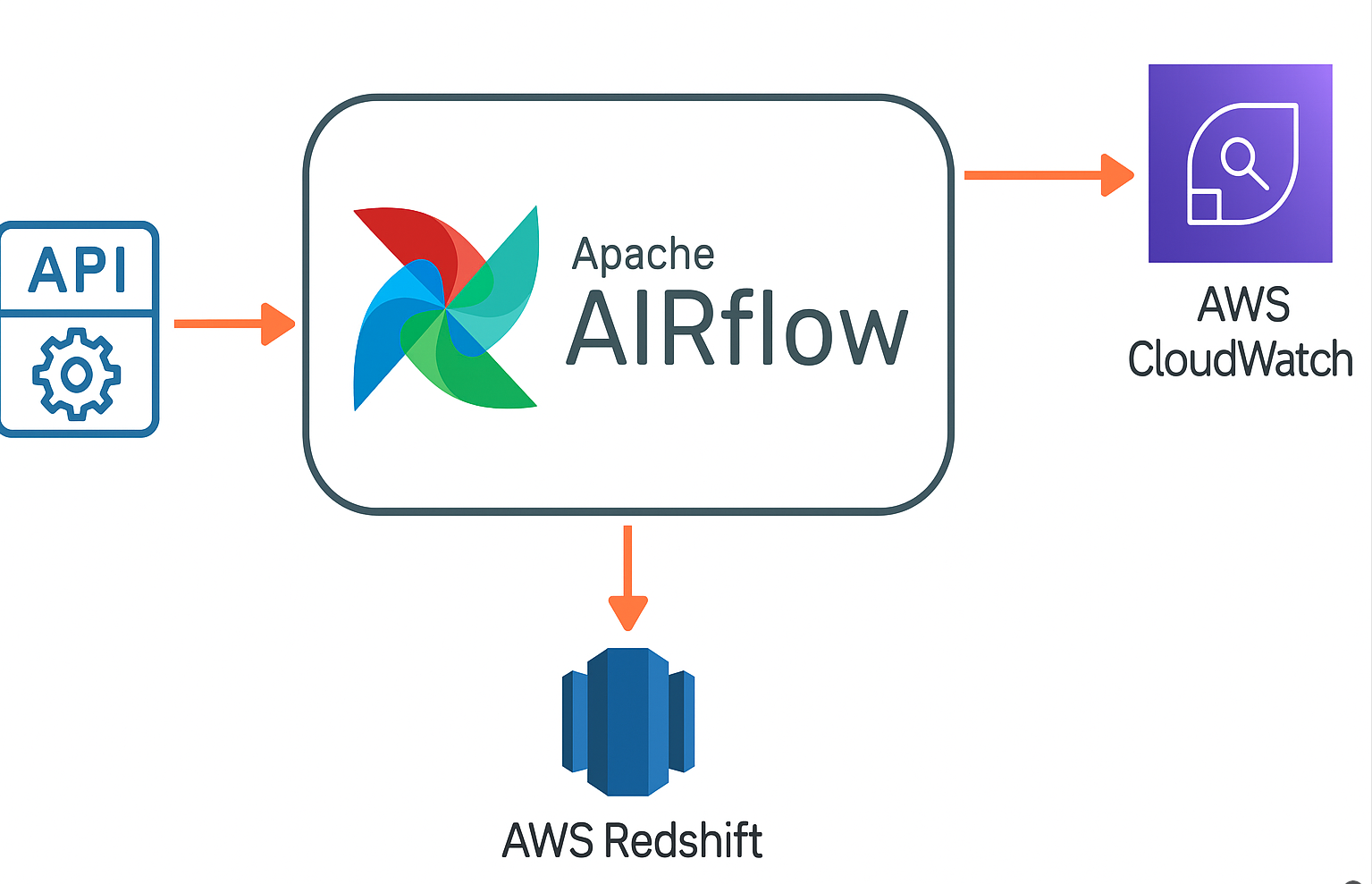

☁️ Cloud ETL Modernization

Scenario: Legacy workflows lacked observability, scalability, and centralized monitoring.

📎 View GitHub Repo

Solution: Built scalable ETL from APIs to Redshift with Airflow orchestration and CloudWatch alerting; standardized schemas and error handling.

✅ Impact: ~30% faster troubleshooting via unified logging/metrics; more consistent SLA adherence.

🧰 Stack: Apache Airflow, AWS Redshift, CloudWatch

🧪 Tested On: AWS Free Tier, Docker
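
One way to read "standardized schemas and error handling" is a single normalization function that every API record passes through, with failures collected instead of aborting the batch. The sketch below assumes a hypothetical raw record shape (`id`, `ts` as epoch seconds, optional `value`); the real APIs and Redshift columns are not shown in this README.

```python
from datetime import datetime, timezone


def standardize(record):
    """Coerce one raw API record to the target schema or raise ValueError."""
    try:
        return {
            "id": int(record["id"]),
            # Epoch seconds become an ISO 8601 UTC timestamp string.
            "event_time": datetime.fromtimestamp(
                float(record["ts"]), tz=timezone.utc
            ).isoformat(),
            "value": float(record.get("value", 0.0)),
        }
    except (KeyError, TypeError, ValueError) as exc:
        raise ValueError(f"bad record {record!r}: {exc}") from exc


def run_batch(raw_records):
    """Return (good_rows, rejection_messages) without failing the whole batch."""
    good, rejected = [], []
    for rec in raw_records:
        try:
            good.append(standardize(rec))
        except ValueError as err:
            rejected.append(str(err))
    return good, rejected


good, rejected = run_batch([
    {"id": "7", "ts": 0, "value": "1.5"},  # valid after coercion
    {"id": "x", "ts": 0},                  # id is not an integer
    {"ts": 0},                             # id is missing
])
```

The rejection messages are the kind of output that could be counted and forwarded as a metric for alerting, while the good rows continue to the load step.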

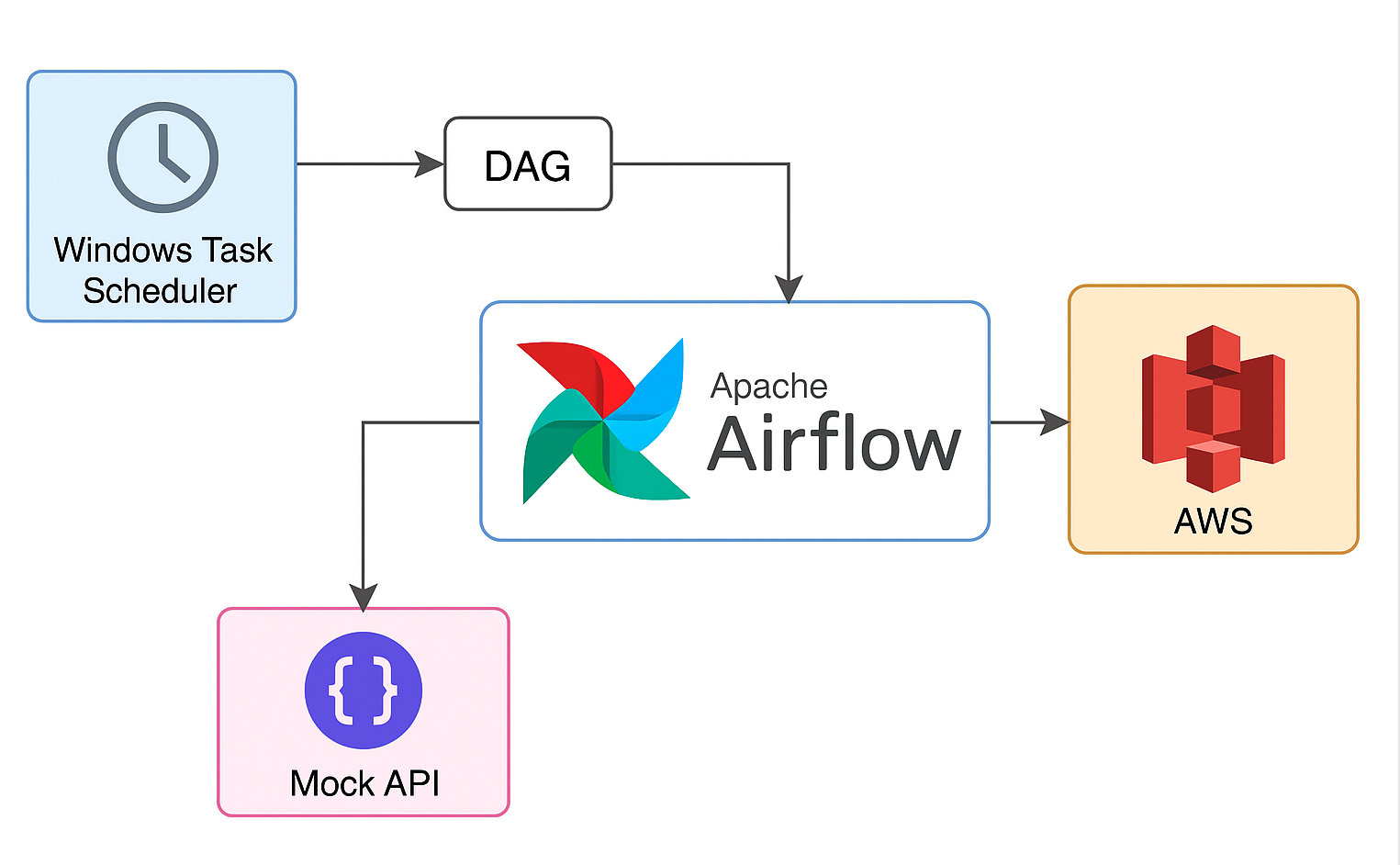

🛠️ Airflow AWS Modernization

Scenario: Legacy Windows Task Scheduler jobs needed modernization for reliability and observability.

📎 View GitHub Repo

Solution: Migrated jobs into modular Airflow DAGs containerized with Docker, storing artifacts in S3 and standardizing logging/retries.

✅ Impact: Up to 50% reduction in manual errors and improved job monitoring/alerting.

🧰 Stack: Python, Apache Airflow, Docker, AWS S3

🧪 Tested On: Local Docker, GitHub Codespaces
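
Airflow provides retries through task-level settings such as `retries` and `retry_delay`. To show the behavior being standardized without requiring an Airflow install, the following sketch implements the same retry-and-log policy as a plain Python decorator. The `with_retries` helper and the `flaky_export` job are hypothetical and are not taken from the repo.

```python
import logging
import time
from functools import wraps

log = logging.getLogger("jobs")


def with_retries(retries=2, delay_s=0.0):
    """Run the wrapped job up to `retries + 1` times, logging every attempt."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            for attempt in range(1, retries + 2):
                try:
                    result = fn(*args, **kwargs)
                    log.info("%s succeeded on attempt %d", fn.__name__, attempt)
                    return result
                except Exception:
                    log.exception("%s failed on attempt %d", fn.__name__, attempt)
                    if attempt > retries:
                        raise  # retries exhausted: surface the failure
                    time.sleep(delay_s)
        return wrapper
    return decorator


calls = {"n": 0}


@with_retries(retries=2)
def flaky_export():
    """Stand-in for a migrated job that fails twice, then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise RuntimeError("transient failure")
    return "exported"


result = flaky_export()
```

Applying one policy to every job is what replaces the ad hoc behavior of individual scheduled scripts.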

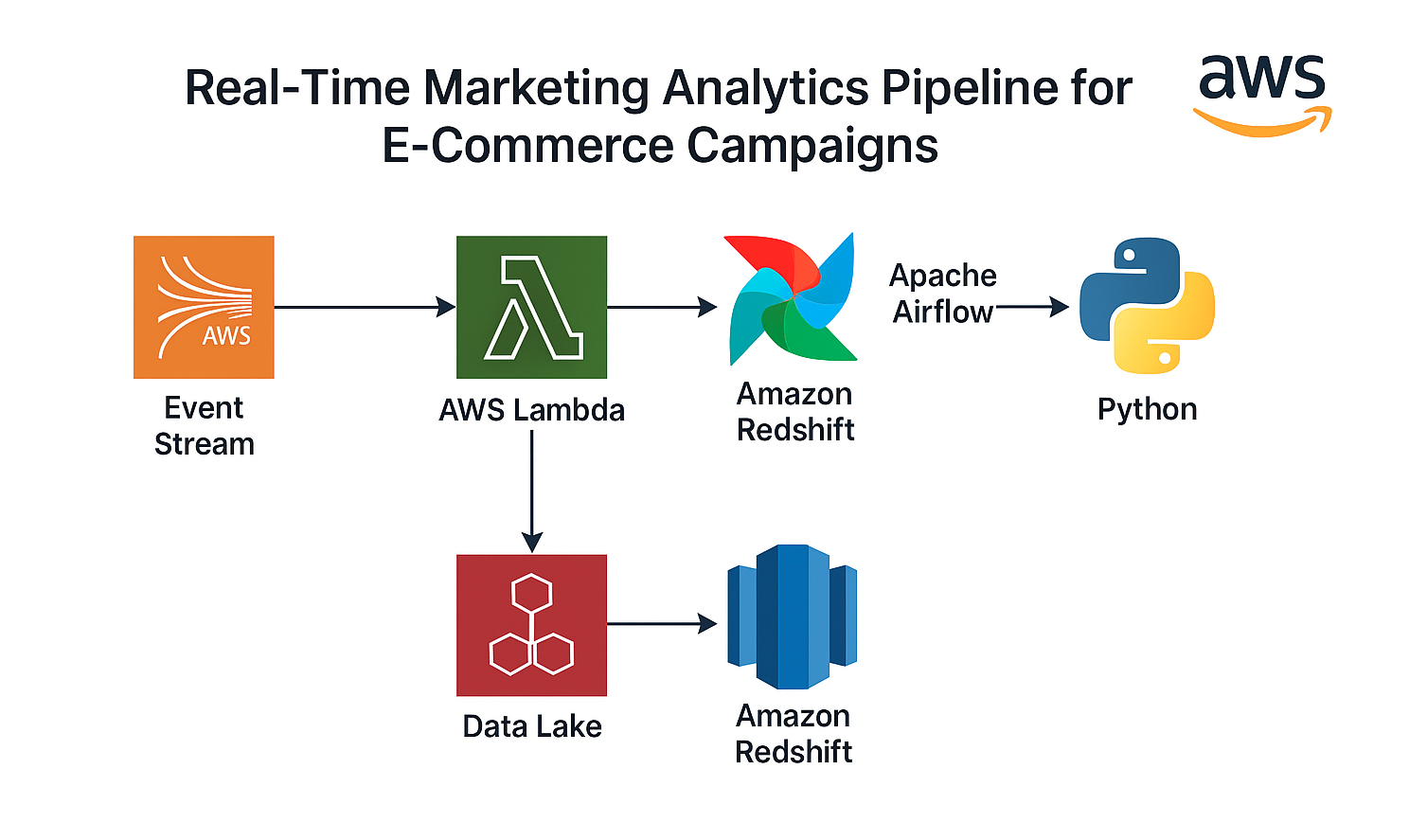

⚡ Real-Time Marketing Pipeline

Scenario: Marketing teams need faster feedback loops from ad campaigns to optimize spend and performance.

📎 View GitHub Repo

Solution: Simulated real-time ingestion of campaign data with PySpark + Delta patterns for incremental insights.

✅ Impact: Reduced reporting lag from 24h → ~1h, enabling quicker optimization cycles.

🧰 Stack: PySpark, Databricks, GitHub Actions, AWS S3

🧪 Tested On: Databricks Community Edition, GitHub CI/CD
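
The incremental part of this pipeline can be pictured as running totals that are updated per micro-batch rather than recomputed from scratch. This is a plain-Python sketch of that idea only; the `CampaignStats` class and the `campaign`, `spend`, and `clicks` fields are assumed for illustration and do not come from the repo.

```python
from collections import defaultdict


class CampaignStats:
    """Running per-campaign totals, updated one micro-batch at a time."""

    def __init__(self):
        self.spend = defaultdict(float)
        self.clicks = defaultdict(int)

    def apply(self, batch):
        """Fold a new batch of events into the running totals."""
        for event in batch:
            self.spend[event["campaign"]] += event["spend"]
            self.clicks[event["campaign"]] += event["clicks"]

    def cost_per_click(self, campaign):
        """Return spend / clicks, or None when there are no clicks yet."""
        clicks = self.clicks[campaign]
        return self.spend[campaign] / clicks if clicks else None


stats = CampaignStats()
stats.apply([{"campaign": "spring", "spend": 10.0, "clicks": 4}])
stats.apply([
    {"campaign": "spring", "spend": 6.0, "clicks": 4},
    {"campaign": "fall", "spend": 3.0, "clicks": 0},
])
```

Each call to `apply` touches only the new events, so the cost of refreshing a metric depends on batch size, not on the full history.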

🎮 Real-Time Player Pipeline

Scenario: Gaming companies need real-time analytics on player activity to optimize engagement and retention.

📎 View GitHub Repo

✅ Impact: Reduced reporting lag from hours → seconds for live ops insights.

🧰 Stack: Kafka / AWS Kinesis, Airflow, S3, Spark

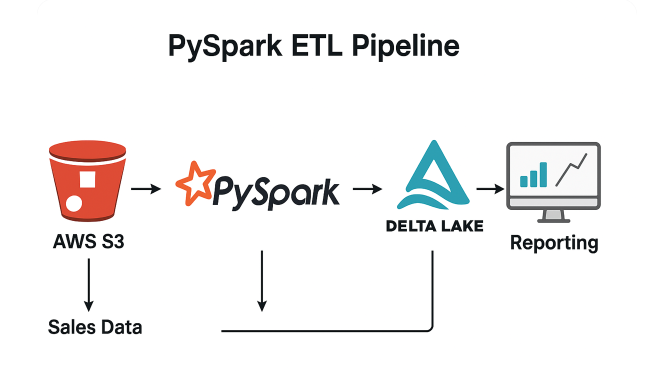

📈 PySpark Sales Pipeline

Scenario: Enterprises need scalable ETL for large sales datasets to drive timely BI and planning.

📎 View GitHub Repo

Solution: Built a production-style PySpark ETL that ingests and transforms sales data into Delta Lake, with partitioning and optimization.

✅ Impact: ~40% faster transformations and improved reporting accuracy with Delta optimizations.

🧰 Stack: PySpark, Delta Lake, AWS S3

🧪 Tested On: Local Databricks + S3
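
Partitioned writes in Spark produce one directory per distinct partition value, named `column=value`. The sketch below mimics that layout in plain Python to show how rows are grouped. The partition columns (`year`, `month`) and the helper names are assumptions, since the README does not say which columns the pipeline partitions on.

```python
from collections import defaultdict


def partition_path(row, keys=("year", "month")):
    """Build a Hive-style partition path such as 'year=2024/month=1'."""
    return "/".join(f"{key}={row[key]}" for key in keys)


def partition_rows(rows, keys=("year", "month")):
    """Group rows by the partition directory they would be written to."""
    parts = defaultdict(list)
    for row in rows:
        parts[partition_path(row, keys)].append(row)
    return dict(parts)


sales = [
    {"year": 2024, "month": 1, "amount": 10.0},
    {"year": 2024, "month": 1, "amount": 5.0},
    {"year": 2024, "month": 2, "amount": 8.0},
]
parts = partition_rows(sales)
```

A query that filters on `year` and `month` only needs to read the matching directories, which is where much of the speedup from partitioning tends to come from.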

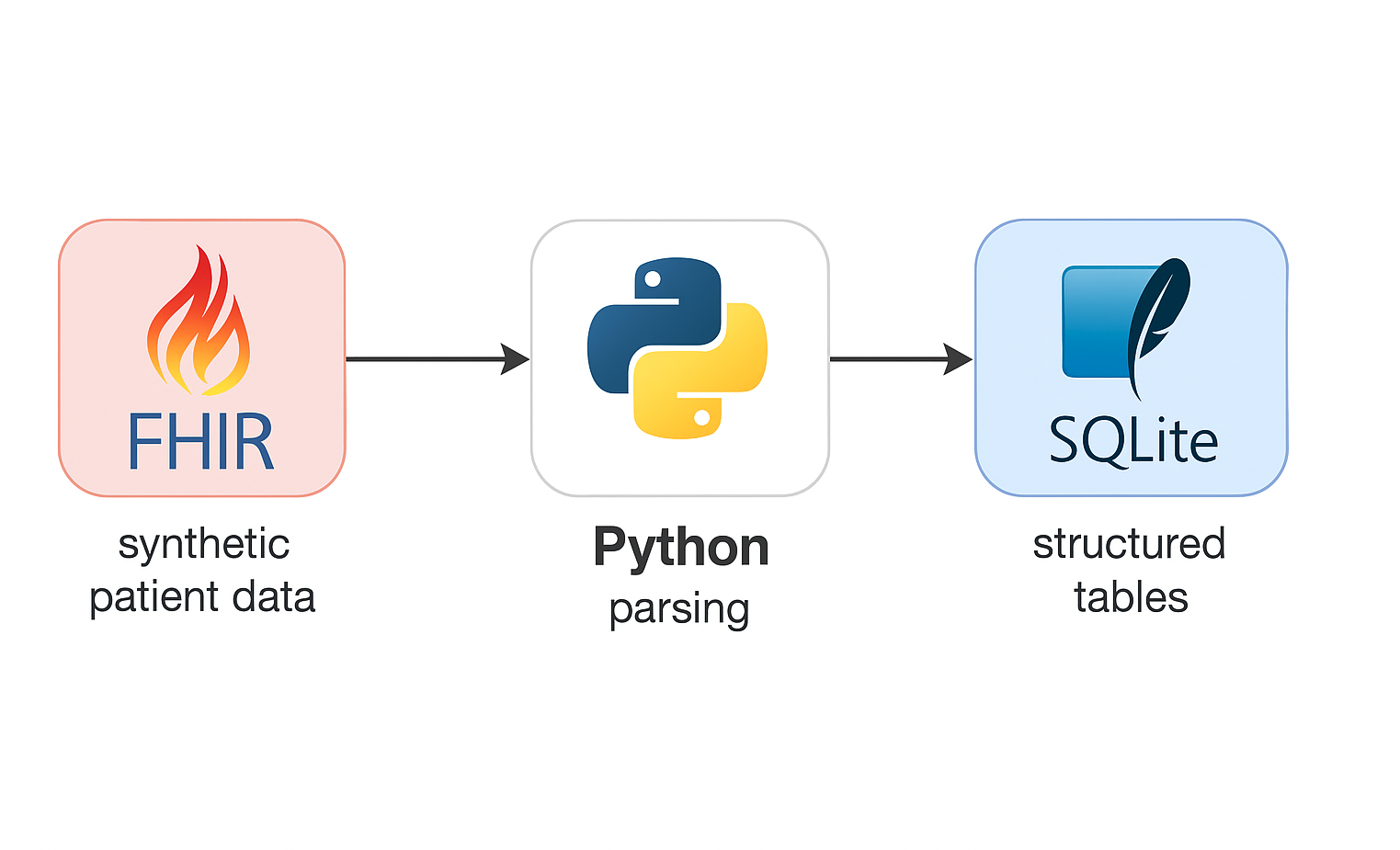

🏥 FHIR Healthcare Pipeline

Scenario: Healthcare projects using FHIR require clean, analytics-ready datasets while preserving clinical context.

📎 View GitHub Repo

✅ Impact: Cut preprocessing time by ~60%; improved data quality.

🧰 Stack: Python, Pandas, SQLite, Streamlit
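
Preparing FHIR data for analytics usually involves flattening nested resources into tabular rows. The example below is a hedged sketch for a `Patient` resource using only standard FHIR fields (`id`, `name`, `gender`, `birthDate`). The output column names and the `flatten_patient` helper are hypothetical, and the sample patient is synthetic.

```python
def flatten_patient(resource):
    """Flatten a FHIR Patient resource (as a dict) into one flat row."""
    if resource.get("resourceType") != "Patient":
        raise ValueError("expected a Patient resource")
    # `name` is a list of HumanName entries; use the first if present.
    name = (resource.get("name") or [{}])[0]
    return {
        "patient_id": resource.get("id"),
        "family_name": name.get("family"),
        "given_names": " ".join(name.get("given", [])),
        "gender": resource.get("gender"),
        "birth_date": resource.get("birthDate"),
    }


# Synthetic example record, not real patient data.
patient = {
    "resourceType": "Patient",
    "id": "example",
    "name": [{"family": "Doe", "given": ["Jane", "Q"]}],
    "gender": "female",
    "birthDate": "1980-05-17",
}
row = flatten_patient(patient)
```

A list of such rows can be passed directly to `pandas.DataFrame(...)` for cleaning and then written to SQLite.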

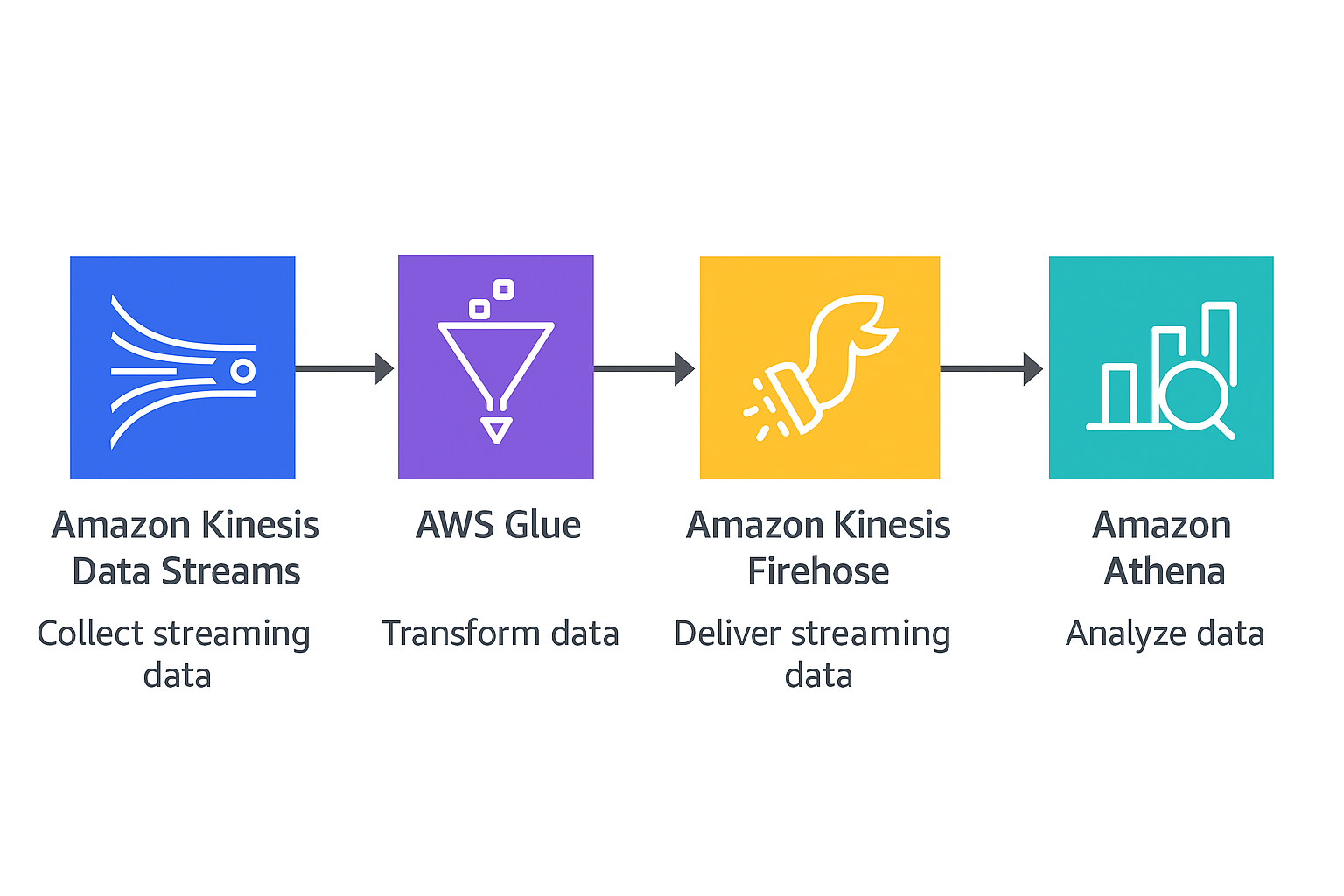

🚀 Real-Time Event Processing with AWS Kinesis, Glue & Athena

Scenario: Simulated a real-time clickstream pipeline where user interaction events are sent to AWS Kinesis, processed with Glue, and queried in Athena.

🧰 Stack: Python, AWS Kinesis, AWS Glue, AWS Athena, S3

✅ Impact: Built a reusable pattern for clickstream and analytics pipelines.
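
On the producer side, a clickstream event has to be serialized and given a partition key before it is sent to Kinesis. The sketch below builds the keyword arguments that boto3's `put_record` accepts (`StreamName`, `Data`, `PartitionKey`) without calling AWS, so it runs offline. The stream name `clickstream-demo`, the event fields, and `to_kinesis_record` are assumptions made for illustration.

```python
import json


def to_kinesis_record(event, stream_name="clickstream-demo"):
    """Build put_record keyword arguments for one clickstream event."""
    return {
        "StreamName": stream_name,  # hypothetical stream name
        "Data": json.dumps(event, separators=(",", ":")).encode("utf-8"),
        # Partitioning by user keeps one user's events on the same shard.
        "PartitionKey": str(event["user_id"]),
    }


record = to_kinesis_record({"user_id": 42, "action": "click", "page": "/home"})

# With AWS credentials configured, the record could be sent with:
#   import boto3
#   boto3.client("kinesis").put_record(**record)
```

Keeping record construction separate from the network call makes the producer easy to unit test.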

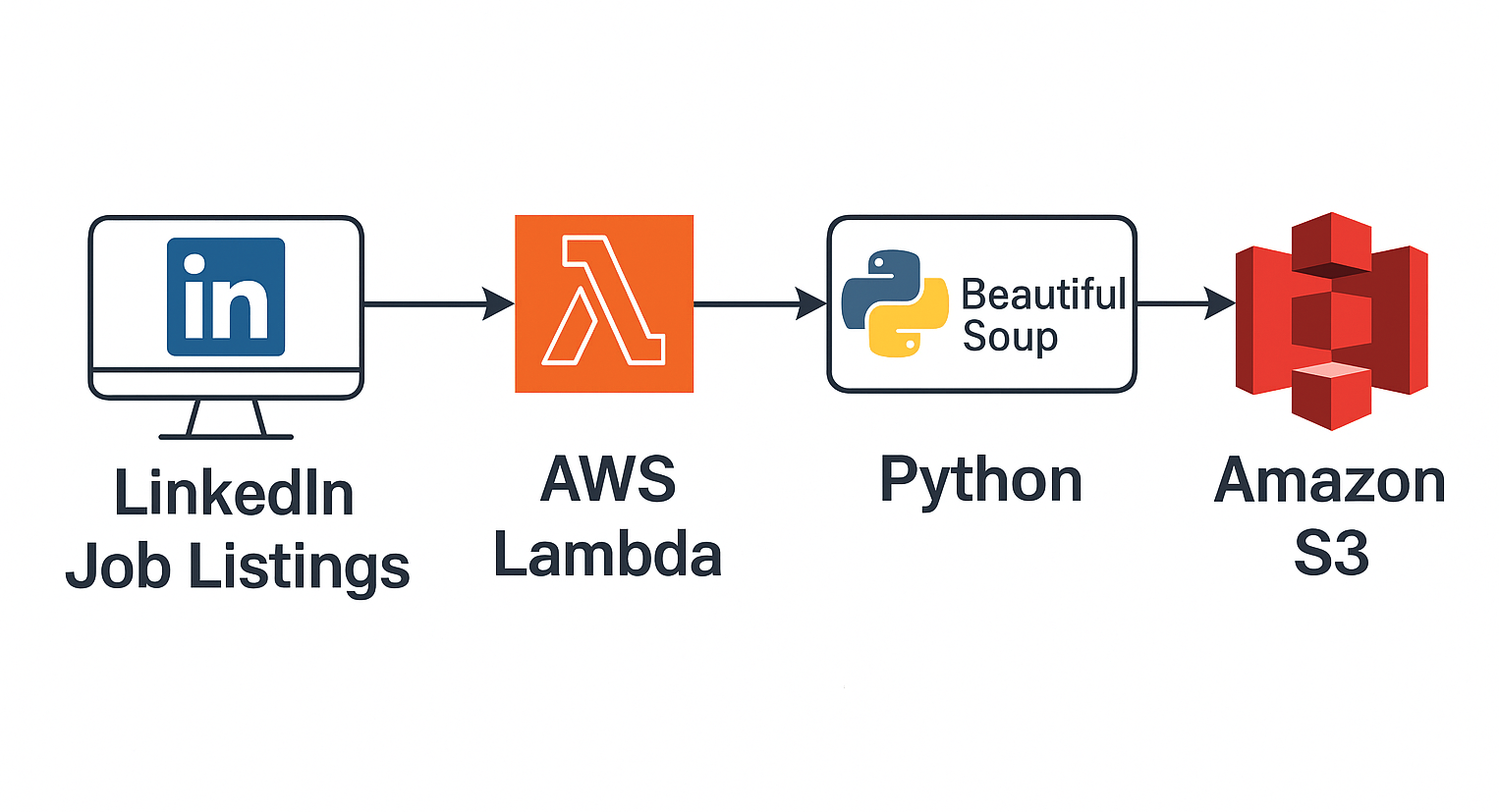

🔍 LinkedIn Scraper (Lambda)

Scenario: Manual job tracking is slow and error-prone for candidates and recruiters.

📎 View GitHub Repo

✅ Impact: Automated lead sourcing and job search analytics.

🧰 Stack: AWS Lambda, EventBridge, BeautifulSoup, S3